Welcome

Battle of the AIs - which wins the research war?

On Saturday, I posted some quick thoughts on the UK government appointing the COO of News UK as Permanent Secretary for Communications. It blew up, and, at the time of writing, has more than 50 comments. My quick take questioned how wise it was to appoint someone who isn't a communications professional to such a senior role. There was also a lively discussion in David Gallagher's WhatsApp community.

Most commentators had similar views or questions to mine. However, quite a few took exception to the fact that he was the editor of The Sun before taking on his management role at News UK. Personally, I think that's a plus, not a negative. My worry is that, once again, this appears to be senior leaders not understanding strategic communications and equating it with media relations, and so assuming that a journalist with little communications experience is best equipped to do it.

I've also written about a quick experiment where, instead of the old vanity search on Google, I asked AI. Or rather, lots of AIs, so I could compare the results. The benefit of asking about yourself isn't just ego: you can instantly assess the accuracy and spot hallucinations. Read the article to see the winners and losers.

There is yet another report out on GEO (generative engine optimisation), or whatever we end up calling it. This one's from Muck Rack. There's a lack of definitive data on just how AI is affecting search, reputation and brands, but one thing that's certain is that it is. We've been working on this since February 2024 (the first reference I can see in one of our decks), but it only began to explode at the end of last year.

The AI arms race is showing no signs of slowing, with Microsoft making waves, both by signalling that it will likely sign the EU’s voluntary AI code of practice and by poaching top talent from Google DeepMind to bolster Copilot. Meta, meanwhile, is charting its own course and giving the Brussels guidelines a pass. Amid this regulatory uncertainty, smart communicators need to be getting “regulatory ready” and doubling down on trust and ethics.

Are you putting emerging AI tools to the toothbrush test? Google co-founder Larry Page’s mantra (will you use it twice a day?) is guiding which AI platforms stick in our working lives. Early adopters and AI advocates appear to love ChatGPT, Perplexity and Claude. But for most people, if AI is going to become a daily habit, it's Copilot and Gemini that are likely to become second nature, as they are built into the apps people already use.

As a gadget geek, I want a desktop robot, and I really hope that UK AI voice-cloning company Synthesia has cracked UK regional accents, as I don't want to sound like a posh southerner!

If you’re tracking the seismic shifts in media (and if you're not, you should be), don’t miss The Atlantic CEO Nick Thompson’s fascinating interview with Azeem Azhar. It covers everything from content licensing deals with OpenAI and the crumbling of the search-and-click model as AI overviews eat referral traffic, to candid advice on staying visible in a fractured media environment. For corporate communicators, understanding how AI and algorithms curate and amplify what’s said about your brand has never been more critical.

This issue also spotlights creative crisis comms (yes, Coldplay and clever humour do mix!), new tools for detecting deepfakes, and new data on how AI affects sustainability (hint: training, not prompting, appears to be the main culprit).

All that, plus tips on using AI ethically for PR pitching, and a stonking Early Bird deal for the 2026 Davos Communications Summit.

News

Watch the AI video summary or listen to the podcast

You can watch my AI video avatar reading a summary of this edition of PR Futurist, or listen to an AI-generated radio show about its contents on the podcast version. The podcast was created using Notebook in Microsoft 365 Copilot.

Help us out and take a few minutes to complete this survey

Folgate Advisors is running a quick survey to understand more about the needs of senior communications professionals. It only takes a few minutes. Thanks.

AI

Microsoft backs EU AI code of practice, Meta rejects it

Microsoft has said that it will likely sign the EU’s voluntary AI code of practice. In contrast, Meta has rejected the guidelines. The lack of settled AI regulation means that AI governance and policies need to be 'regulatory-ready', anticipating what might happen. Microsoft is already the first-choice AI provider for many organisations because of its privacy and security, so this move strengthens its position as the safest choice for AI.

Does your AI pass the toothbrush test?

The toothbrush test was devised by Google co-founder Larry Page: is your product something people will use at least twice a day? If it is, it becomes a daily habit.

Even if a specialised tool would be perfect for your needs, if it’s something you’re only going to use every once in a while, you'll probably forget it exists.

That's one of the reasons why Microsoft 365 Copilot and Gemini in Google Workspace are effective AI tools. It doesn't matter if other tools are more powerful, have better features, are easier to use or offer any other benefits. If they aren't essential to day-to-day work and second nature, most people won't use them.

Image generated by Microsoft Copilot.

Do you want your own desktop robot? I do!

I'm constantly pushing the envelope to explore the future of public relations... okay, I'll admit it, I'm a sucker for shiny new gadgets, so I want to get my hands on a Reachy Mini. It's a new $449 desktop robot "designed to bring AI-powered robotics to millions."

I've absolutely no idea why or what I'll do with it. But figuring it out is part of the fun, and I might actually come up with a practical idea.

"The robot features six degrees of freedom in its moving head, full body rotation, animated antennas, a wide-angle camera, multiple microphones and a 5-watt speaker. The wireless version includes a Raspberry Pi 5 computer and battery, making it fully autonomous."

Microsoft poaches Google DeepMind AI talent as it beefs up Copilot

Big tech companies are in an arms race to recruit the leading minds in AI development. The latest is a CNBC report about Microsoft's Mustafa Suleyman hiring around two dozen employees from Alphabet’s Google DeepMind artificial intelligence research lab. Suleyman was one of the original founders of British AI firm DeepMind before it was acquired by Alphabet/Google.

As Google loses key staff to Microsoft, it has been on its own expensive hiring spree, bringing in the CEO and other employees of AI coding startup Windsurf in a $2.4 billion deal.

OpenAI CEO Sam Altman claims Meta has offered his employees signing bonuses of up to $100 million.

Mistral AI shares sustainability data

Everyone thinks the rapid growth of AI is having a negative impact on sustainability. That's where consensus stops, as there is a distinct lack of definitive data with robust, transparent methodologies to measure exactly what that impact is.

European large language model developer Mistral AI has published its own data, which it claims is its "contribution to a global environmental standard for AI". However, despite being peer reviewed, the data still isn't particularly clear.

It appears to show that most of the emissions and water consumption come from training, with users' prompts having a much lower impact. However, it reports 'Model training and inference' together as 85.5% of GHG emissions and 91% of water consumption. The problem is that inference is driven by user prompts, so it's not possible to see the split between model training and inference.

I had a lengthy chat with AI (Copilot) to analyse the page and check whether my interpretation of the data was correct. It concluded:

Your first statement is largely correct for this Mistral AI model—most emissions and water use are from training.

- Inference (users’ prompts) has a much smaller impact per use, but can accumulate with high frequency or very large user bases.

Here's the nuance:

- Training is the big, one-time event where most emissions and water use occur. For Mistral Large 2, this training accounted for 20,400 tonnes CO₂e and 281,000 m³ of water over 18 months.

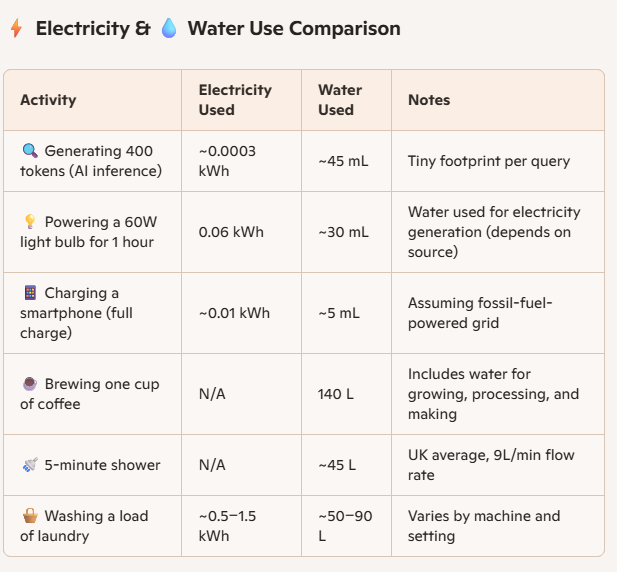

- Inference is what happens every time a user interacts with the model. Each prompt uses a small amount of energy and water (e.g., 1.14 g CO₂e, 45 mL water for a 400-token reply)—far less than training, but this can add up over millions or billions of queries.

I then asked it to compare a user prompt that created a one-page result with other uses of electricity and water. By its estimate, a 60W bulb running for an hour uses approximately 200 times as much energy as a single prompt.

The other results are shown in the image (they have not been separately checked by an expert).
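To put "can add up" into rough numbers, here is a back-of-the-envelope sketch using only the figures quoted above. It assumes, as the Copilot summary does, that the 20,400 tonnes CO₂e and 281,000 m³ of water are the training footprint (the report itself blends training and inference), and that every prompt costs the same as the 400-token reply example. Longer replies would cost more, so treat the result as an order of magnitude, not a measurement.

```python
# Figures quoted above for Mistral Large 2 (assumed here to be the training footprint).
TRAINING_CO2E_TONNES = 20_400   # tonnes CO2e
TRAINING_WATER_M3 = 281_000     # cubic metres of water
PROMPT_CO2E_G = 1.14            # grams CO2e per 400-token reply
PROMPT_WATER_ML = 45            # millilitres of water per 400-token reply

# Convert the training totals into the same units as the per-prompt figures.
training_co2e_g = TRAINING_CO2E_TONNES * 1_000_000   # 1 tonne = 1,000,000 g
training_water_ml = TRAINING_WATER_M3 * 1_000_000    # 1 m3 = 1,000 L = 1,000,000 mL


def prompts_to_match(training_total: float, per_prompt: float) -> float:
    """Number of identical prompts whose combined footprint equals the training footprint."""
    return training_total / per_prompt


co2e_break_even = prompts_to_match(training_co2e_g, PROMPT_CO2E_G)
water_break_even = prompts_to_match(training_water_ml, PROMPT_WATER_ML)

print(f"CO2e: about {co2e_break_even / 1e9:.1f} billion prompts")
print(f"Water: about {water_break_even / 1e9:.1f} billion prompts")
```

Under those assumptions it takes roughly 17.9 billion such replies to match the training emissions and about 6.2 billion to match the training water use: tiny per prompt, but well within reach for a service used at global scale.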

Diginomica recently published an interesting article on AI and energy: Tackling the AI energy hog - from nuclear power to intelligent chips and smart data inputting.

AI and the future of media – Azeem Azhar interviews The Atlantic CEO, Nick Thompson

Nick Thompson, CEO of The Atlantic, is one of the smartest thinkers I've heard on how the publishing industry and big tech AI can coexist to mutual benefit and for the good of society and the economy.

It's well worth listening to this fascinating, wide-ranging interview with Azeem Azhar. Some of the key themes covered include:

🧠 How AI is reshaping media strategy

- How in early 2024 The Atlantic was among the first publishers to license content to OpenAI, and how this might be one of the new models for monetising journalism through partnerships with AI platforms.

- The media ecosystem is undergoing a transformation from centralised editorial control to distributed, algorithmically driven discovery models.

📉 Trust, attention and audience fragmentation

- The erosion of public trust in traditional media, coupled with the rise of niche creators and influencer-driven content channels.

- PR and communications professionals must grapple with attention economics—earning trust and visibility amid fragmented audiences and AI-curated feeds.

📢 Implications for corporate communicators

As media platforms shift toward AI-assisted personalisation, the relevance and discoverability of corporate narratives will increasingly depend on how well they're optimised for machine reading, indexing, and summarisation. Communicators should consider partnerships with media outlets who are experimenting with ethical AI integration, as well as prioritising transparency about how information is sourced and shared.

🔍 The business model shake-up

- Licensing models (like The Atlantic's deal with OpenAI) may provide a roadmap for content owners looking to retain IP value while enabling AI innovation.

- This opens doors for branded content strategies that work symbiotically with AI systems rather than competing with them.

The interview also covers: journalism’s four horsemen; the collapse of search; Cloudflare’s counterattack; is this the search-traffic fix?; rise of the sovereign creator; do great writers need editors?; why conservatives win new media; how Substack drives discovery; East Coast vs. West Coast ethics; how he personally uses AI in writing; is AI friend or foe to journalism?

I met Nick Thompson and heard him speak at the PRovoke Global Summit in Washington DC in November 2023, and his ideas contributed a lot to my own thinking on the future of content and publishing in an AI era. He offers a rational view of what a fair relationship between AI companies and publishers should look like, one in which both work together for the benefit of people as well as themselves, instead of pursuing only their own self-interest.

A creative writing professor's essay offering a smart take on AI

Too often we see tin-hat, frothing-at-the-mouth rants against AI, or gushing evangelists raving about it. This refreshing essay offers a thoughtful, balanced perspective. It's by Meghan O'Rourke, the editor in chief of The Yale Review.

I found it via Nick Thompson, CEO of The Atlantic, who said: "This is the best essay on writing with AI that I’ve read."

Corporate affairs

Is AI on your risk register?

America's largest companies are listing AI among the major risks they must disclose in formal financial filings, despite confident statements and bullish predictions about AI's potential opportunities. A report from research firm The Autonomy Institute says three-quarters of companies in the S&P 500 index have updated their official risk disclosures to detail or expand upon mentions of AI-related risk factors during the past year.

Risks that corporate affairs professionals need to be aware of and prepare for go far beyond the obvious security and privacy ones, which are the main focus of most IT teams. There is also the rapidly growing threat of disinformation, misinformation and deepfakes.

But potentially the biggest risks are the ethical and reputational ones that companies create for themselves because their AI strategy and governance aren't comprehensive enough, having been created primarily by IT and legal. There is also the risk that reputations and brands are now being shaped and informed by AI answers, eclipsing traditional search.

CommTech tools

What does AI say about you?

What does AI say about you? Is asking AI to research you the new Googling yourself?

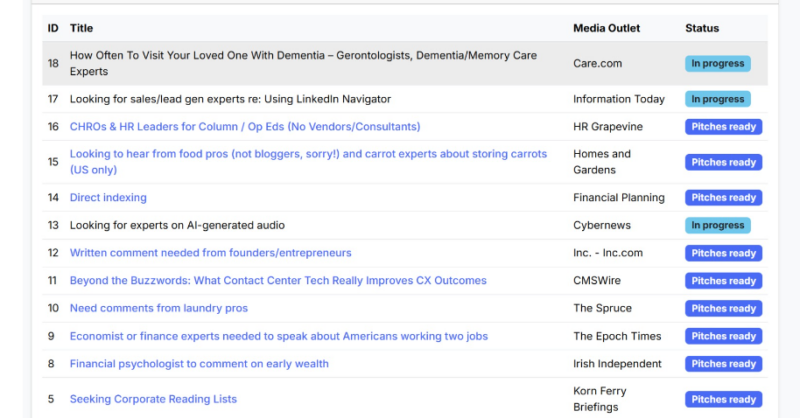

I asked all of the major AI large language models the same question and wrote an article about the results.

The exercise wasn’t intended to be a robust comparison of the research capabilities of different large language models. I did a vanity search of my name as it was the easiest and fastest to check for accuracy.

Read the article for the full comparison of word counts, mistakes and sources, along with my review and analysis of accuracy and links to the full results.

The quick summaries were:

- Perplexity - Best for quick, accurate research.
- Copilot - Best for a comprehensive, detailed report.
- Gemini - High risk, as it made serious mistakes or hallucinations.
- Grok - Doesn’t cite sources, so use with caution.
- Claude - Better at writing than it is at research.
- ChatGPT - High risk, as it made serious mistakes or hallucinations.

New AI voice tool claims to cope with British regional accents

If you've watched or listened to any of my HeyGen videos, you'll know that while it does a good job of looking like me, the accent is appalling. It strips out my nondescript northern accent and replaces it with a nondescript southern one.

Now Synthesia, a UK company, claims it can reproduce a range of accents, outperforming its US and Chinese rivals. Synthesia spent a year compiling its own database of UK voices with regional accents, by recording people in studios and gathering online material.

I've paid for an annual subscription to HeyGen, so I'll probably have to wait until I can switch to Synthesia to see if it really can talk the talk.

NewsWhip launches real-time AI monitoring agent

NewsWhip has announced the launch of an AI monitoring agent designed to monitor the world’s news, detect narrative and business risks as they emerge, and provide alerts and context to help communications teams decide when and how to respond.

Paul Quigley, CEO and co-founder of NewsWhip, said: “Agentic AI will transform the game for brand and issue monitoring. We expect PR and comms professionals will quickly shift from daily or other periodic media reports, to trusting their ‘always on’ Agent team-mate – telling them what they need to know, when they need to know it.”
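The announcement doesn't say how the agent decides what deserves an alert, so the following is purely a hypothetical illustration of the general idea behind "always on" monitoring, not NewsWhip's method: compare each hour's mention count against a trailing baseline and flag sudden spikes. The function name, the 24-hour window and the three-standard-deviation threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def spike_alerts(hourly_mentions: list[int], window: int = 24, threshold: float = 3.0) -> list[int]:
    """Return the indices of hours whose mention count is unusually high.

    An hour is flagged when it sits more than `threshold` standard deviations
    above the mean of the previous `window` hours. This is a generic z-score
    rule used for illustration only.
    """
    alerts = []
    for i in range(window, len(hourly_mentions)):
        baseline = hourly_mentions[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline) or 1.0  # fall back to 1 if the baseline is perfectly flat
        if (hourly_mentions[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts
```

Fed a steady day of mentions followed by a sudden jump, this flags only the jump. Detecting the spike is the easy part; the value the announcement emphasises is the context that helps a team decide whether and how to respond.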

Crisis communication

Coldplay hug company uses humour to go viral and change the narrative

We've all seen that hug with the Astronomer CEO and HR head. Most of the world had never heard of Astronomer before that moment. Most of the world didn't need to know its name, as it was a B2B brand with specific target markets.

After initial silence and missteps, Astronomer didn't play defence; it came out with a full-throttle response.

Instead of the world laughing at it, Astronomer made the world laugh with it.

They consciously coupled up with Gwyneth Paltrow, actor turned self-anointed wellness guru and former wife of Coldplay frontman Chris Martin. Paltrow uses her Oscar-winning acting skills to deliver a deadpan masterclass parody.

In a crisis, not all stakeholders are equal. You're not seeking to repair your reputation with the world. I don't know which stakeholders matter to Astronomer because, like every other armchair critic, I'm not privy to its internal workings and don't know what its primary objectives are. If we assume it is customers, prospects, employees, prospective employees and shareholders, then I'd say this video hits the mark.

Not everyone is a fan, but it doesn't really matter what the general public (most of whom have never heard of Astronomer) thinks if it works with those important stakeholders.

Astronomer reminds its target market what it's really good at and informs the world what it actually does.

It is corporate storytelling at its finest and a rare, great example of using clever humour in corporate communications.

If you want to know more about humour in public relations and communications read this timely 'The Case for Strategic Levity' report from Smoking Gun.

CommTech newswatch

Cyabra launches deepfake detection tool

Cyabra has launched an advanced deepfake detection tool, which uses proprietary AI models to analyse visual content.

Disinformation and deepfakes consistently appear near the top of threats identified in global risk reports. The most high-profile examples are usually of politicians and elections, but companies are increasingly under threat and many have already been victims.

Corporate communication professionals need to understand disinformation, misinformation and deepfakes. They need to be able to rapidly verify incoming information to protect their brand and corporate reputation from manipulated content. They also need to educate stakeholders on media authenticity.

Cyabra's CEO, Dan Brahmy, describes it as: "one of the biggest technological achievements in Cyabra’s history."

Cyabra can now detect and analyse manipulated media with forensic precision, covering fake profiles, harmful narratives, GenAI content and now deepfake visual content. It uses hundreds of different behavioural parameters and "spatio-temporal analysis" not just to identify fakes, but also to explain why they are fake.

Brahmy explains the 'why' matters because "deepfakes are not created in a vacuum. They’re always part of a bigger picture, a bigger disinformation campaign, a bigger effort to manipulate public discourse."

Research and reports

AI overviews cut clickthroughs by up to 80%

A study by the Pew Research Center, a US think tank, found a huge hit to referral traffic from Google AI Overviews. A month-long survey of almost 69,000 Google searches found users only clicked a link under an AI summary once every 100 times.

A second study by the Authoritas analytics company has found that a site previously ranked first in a search result could lose about 79% of its traffic for that query if results are delivered below an AI overview.

Owen Meredith, the chief executive of the News Media Association, said Google was trying to keep users “within its own walled garden, taking and monetising valuable content – including news – created by the hard work of others. The situation as it stands is entirely unsustainable and will ultimately result in the death of quality information online."

Another report on AI replacing search - from Muck Rack

Another day, another report on GEO, or AI as a stakeholder. This time it's from Muck Rack. We've been looking at this since early 2024 (the first mention in a PowerPoint deck was February). There are lots of reports and opinions, all based on different methodologies. The elephant in the room is that AI companies don't disclose how their systems work and, unlike with search, there aren't the same reliable data sources on which to base reports and advice.

The methodologies behind these research reports matter because of the lack of data. Some of the issues include:

➡️ Variance between different LLMs (and different models from the same company)
➡️ Variance between phrasings of prompts
➡️ Variance in LLM answers to the same prompt
➡️ Technical access: web interface or API
➡️ Just LLM model answers, or blended with real-time search
➡️ Choice of subject for prompts
➡️ Definition of sources/citations/categorisations

That's why we've been analysing key themes and trends as the detail in reports is too varied and therefore unreliable.
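One way to live with answer-to-answer variance, sketched here as an assumption rather than how Muck Rack or anyone else actually does it, is to ask the same prompt several times and look at how often each source is cited across all the runs, instead of trusting any single answer. The function name and the placeholder domains below are hypothetical, and collecting the citations from each run is left out.

```python
from collections import Counter


def citation_share(runs: list[set[str]]) -> dict[str, float]:
    """For each source, the fraction of runs (repeats of one prompt) that cited it."""
    if not runs:
        return {}
    counts = Counter(source for cited in runs for source in cited)
    return {source: n / len(runs) for source, n in counts.most_common()}


# Hypothetical citations from four runs of the same prompt (placeholder domains).
runs = [
    {"tradepub.example", "brand.example"},
    {"brand.example"},
    {"forum.example", "brand.example"},
    {"tradepub.example"},
]
print(citation_share(runs))
```

Here brand.example is cited in three of four runs (0.75), tradepub.example in two (0.5) and forum.example in one (0.25). A share across many runs is a steadier signal than whichever sources happened to appear in one answer.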

Some of the key trends and themes we know are important include:

🗞️ Earned media - media coverage in national, trade and specialist media, including blogs.
📚 Owned media - stuff we publish ourselves, especially if long-form and structured.
🧑‍🤝‍🧑 User-generated content - forums, reviews and people sharing their experiences and opinions.
🗃️ Structured data - lists, tables, charts, documentation, FAQs etc.
🎊 Multiple diverse sources - confirmation of the same information from multiple places.

This is by no means a definitive list, but multiple sources make reference to these themes.

Get in touch if you want advice on what this means for your reputation, brand and communications strategy. The bottom line is that it matters a lot, so you're in trouble if you aren't already thinking about it.

Professional practice

UK government appoints News UK COO as permanent secretary for communications

Weekend LinkedIn posts aren't meant to get this much engagement. But I think I struck a nerve with my quick take on the appointment of News UK COO as the UK government's new Permanent Secretary for Communications. Most people were in agreement with my quick take, with one or two alternative views. Read it on LinkedIn to see all the comments (58 at time of writing).

The Guardian reports that the UK government has appointed a newspaperman as the new permanent secretary for communications. This is surprising and alarming. It is a new role, replacing the CEO of the Government Communication Service (GCS), now that Simon Baugh is departing.

It's surprising and alarming because the previous two incumbents were communications professionals, and David Dinsmore has never even held a professional communications role. Alex Aiken was the first head of GCS and built it up into the global gold standard for government communications (read the OECD report to understand more). Over the last five years, Simon has built on Alex's legacy to ensure GCS remains at the forefront not just of government communications, but of communications excellence, outperforming many private sector and global companies.

David Dinsmore is the ex-editor of The Sun and, more recently, Chief Operating Officer of News UK. I've no doubt he's brilliant at both of these roles. But these are journalism, media and publishing credentials. They are not qualifications for professional communications, which requires expertise in communication strategy, behavioural science, measurement and evaluation to name but some.

It's worrying that, according to the report, Keir Starmer interviewed the candidates and was impressed with "Dinsmore’s understanding of modern communication challenges." How would Starmer know? He's a politician and a lawyer. Neither is a great qualification for understanding modern communication challenges.

This looks like the age-old problem of leaders who know little about communications equating it with mainstream news, so thinking a journalist is capable of doing it.

This couldn't be more wrong. Journalists transitioning to public relations and communications bring amazing experience and expertise in a small part of the job, but very little of either in most of it.

It's alarming that now neither the head of political communications working for Starmer nor the head civil service communicator is a communications professional.

I wish David Dinsmore well when he starts the new role, and my sincere hope is that he doesn't do too much other than listen to, and take expert advice from, the professionals he'll be leading.

New AI tool spams journalists using request services - but it could be made to work!

Journalists and the PR industry have reacted negatively to a PR agency selling an AI tool that automatically responds to journalists' requests on services such as ResponseSource, HARO and Qwoted.

The negative reaction isn't surprising, as the tool appears to be AI-automated spam without a human in the loop.

However, much of the criticism overlooks how AI could be used ethically and effectively to improve PR responses to journalist request services.

A far better approach would be to create an AI agent that would:

- Analyse the requests from all the services to identify those relevant to the company or a PR agency's clients. The matching should be accurate because it would compare each request with all of the internal data and information it holds about the company or clients.

- Draft an initial response with a pitch based on actual company expertise and relevance to answer the request.

- Use predictive analytics to see how closely the pitch matched what the journalist and publication usually wrote about and recommend changes to make it fit better.

- Pass the draft to a human expert, who checks, edits and personalises the pitch before it is sent.

If used properly, an AI agent like this should mean journalists receive fewer, but more relevant pitches faster.
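As a minimal sketch of that workflow, assuming a hypothetical list of requests already pulled from the services and a simple set of lowercase expertise keywords, the code below filters requests by crude keyword overlap, drafts a placeholder pitch for each relevant one, and only marks a pitch approved once a human has edited it. The class and function names, the 0.2 relevance threshold and the keyword matching are all illustrative assumptions; the predictive-analytics step is left out, and a real agent would need far richer matching and drafting.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """A journalist request pulled from a request service (hypothetical shape)."""
    journalist: str
    outlet: str
    text: str


@dataclass
class Draft:
    request: Request
    pitch: str
    relevance: float
    approved: bool = False  # nothing goes out until a human approves it


def relevance(request: Request, expertise: set[str]) -> float:
    """Crude keyword overlap: share of (lowercase) expertise terms found in the request."""
    words = {w.strip(".,?!:;").lower() for w in request.text.split()}
    return len(words & expertise) / len(expertise) if expertise else 0.0


def triage(requests: list[Request], expertise: set[str], min_score: float = 0.2) -> list[Draft]:
    """Keep relevant requests and draft a placeholder pitch for each, best matches first."""
    drafts = []
    for req in requests:
        score = relevance(req, expertise)
        if score >= min_score:
            # Placeholder text; a real agent would draft from actual company expertise.
            pitch = f"Draft pitch for {req.journalist} at {req.outlet}"
            drafts.append(Draft(req, pitch, score))
    return sorted(drafts, key=lambda d: d.relevance, reverse=True)


def human_review(draft: Draft, edited_pitch: str) -> Draft:
    """Human-in-the-loop step: the expert's edited pitch replaces the draft and is approved."""
    draft.pitch = edited_pitch
    draft.approved = True
    return draft
```

The design choice that matters is that `triage` never sends anything: irrelevant requests are dropped before drafting, and approval only happens in `human_review`, which is the human in the loop the criticised tool appears to lack.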

Book early for big Davos discount

After the success of this year's Davos Communications Summit, the executive committee of the World Communication Forum Association is making plans for next year's summit.

Why not join us for two inspiring days in the Swiss Alps where top minds in AI, digital marketing, branding, PR and communications come together to shape the future?

📍 April 23–24, 2026 | Davos, Switzerland

🎟️ Early Bird offer – Get 50% off your Standard ticket if you book before 31 August

We're also starting to create bespoke packages for sponsors and take pitches from potential speakers.

Register now to secure your spot at a 50% discount.

If you're interested in becoming a partner or sponsor, please get in touch.

Look forward to seeing you in Davos!